Rails Performance Needs an Overhaul

Browsers are getting faster; JavaScript frameworks are getting faster; MVC frameworks are getting faster; databases are getting faster. And yet, even with all of this innovation around us, it feels like there is a massive gap when it comes to the end product of delivering an effective and scalable service as a developer: the performance of most of our web stacks, when measured end to end, is poor at the best of times, and plain terrible most of the time.

The fact that a vanilla Rails application requires a dedicated worker with a 50MB stack to render a login page is nothing short of absurd. There is nothing new about this, nor is this exclusive to Rails or a function of Ruby as a language - whatever language or web framework you are using, chances are, you are stuck with a similar problem. But GIL or no GIL, we ought to do better than that. Node.js is a recent innovator in the space, and as a community, we can either learn from it, or ignore it at our own peril.

Measuring End-to-End Performance

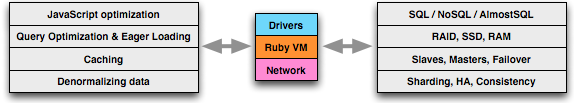

A modern web-service is composed of many moving components, all of which come together to create the final experience. First, you have to model your data layer, pick the database and then ensure that it can get your data in and out in the required amount of time - lots of innovation in this space thanks to the NoSQL movement. Then, we layer our MVC frameworks on top, and fight religious wars as developers on whose DSL is more beautiful - to me, Rails 3 deserves all the hype.

On the user side, we are building faster browsers with blazing-fast JavaScript interpreters and CSS engines. However, the driveshaft (the app server), which connects the engine (data & MVC) to the front-end (the browser, DOM & JavaScript), is often just a checkbox in the deployment diagram. The problem is, this checkbox is also the reason why the ‘scalability’ story of our web frameworks is nothing short of terrible.

It doesn't take much to construct a pathological example where a popular framework (Rails), combined with a popular database (MySQL) and a popular app server (Mongrel), produces less than stellar results. Now the finger pointing begins. MySQL is more than capable of serving thousands of concurrent requests, the app server also claims to be threaded, and the framework even allows us to configure a database pool!

Except that, the database driver locks our VM, and both the framework and the app server still have a few mutexes deep in their guts, which impose hard limits on the concurrency (read, serial processing). The problem is, this is the default behaviour! No wonder people complain about 'scalability'. The other popular choices (Passenger / Unicorn) “work around” this problem by requiring dedicated VMs per request - that's not a feature, that's a bug!
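
To make the "serial processing" point concrete, here is a minimal sketch, not taken from any real driver or app server. It assumes a request that spends its time waiting on I/O (simulated with `sleep`), and compares threads that share one lock against threads that don't. The names `handle_request` and `time_requests` are hypothetical, made up for this illustration.

```ruby
require "benchmark"

# Hypothetical stand-in for a request that mostly waits on I/O
# (a database call, for example). `sleep` simulates the wait.
def handle_request(io_wait)
  sleep(io_wait)
end

# Run `count` requests, one thread each, and return the wall-clock time.
# When `lock` is given, every request must hold it for its whole duration,
# which mimics a mutex buried in a driver, framework, or app server.
def time_requests(count, io_wait, lock: nil)
  Benchmark.realtime do
    threads = Array.new(count) do
      Thread.new do
        if lock
          lock.synchronize { handle_request(io_wait) }
        else
          handle_request(io_wait)
        end
      end
    end
    threads.each(&:join)
  end
end

locked   = time_requests(5, 0.05, lock: Mutex.new)
unlocked = time_requests(5, 0.05)

puts format("with shared lock:    %.3fs", locked)
puts format("without shared lock: %.3fs", unlocked)
```

With the shared lock, the five "threaded" requests run one after another, so the total time is roughly the sum of the waits. Without it, the waits overlap. The thread count on the deployment diagram is the same in both cases.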

The Rails Ecosystem

To be fair, we have come a long way since the days of WEBrick. In many ways, Mongrel made Rails viable, Rack gave us the much needed interface to become app-server independent, and the guys at Phusion gave us Passenger which both simplified the deployment, and made the resource allocation story moderately better. To complete the picture, Unicorn recently rediscovered the *nix IPC worker model, and is currently in use at Twitter. Problem is, none of this is new (at best, we are iterating on the Apache 1.x to 2.x model), nor does it solve our underlying problem.

Turns out, while all the components are separate, and it's great to treat them as such, we do need to look at the entire stack as one picture when it comes to performance: the database driver needs to be smarter, the framework should take advantage of the app server's capabilities, and the app server itself can't pretend to work in isolation.

If you are looking for a great working example of this concept in action, look no further than node.js. There is nothing about node that can't be reproduced in Ruby or Python (EventMachine and Twisted), but the fact that the framework forces you to think and use the right components in place (fully async & non-blocking) is exactly why it is currently grabbing the mindshare of the early adopters. Rubyists, Pythonistas, and others can ignore this trend at their own peril. Moving forward, end-to-end performance and scalability of any framework will only become more important.

Fixing the "Scalability" Story in Ruby

The good news is, for every outlined problem, there is already a working solution. With a little extra work, the driver story is easily addressed (MySQL driver is just an example, the same story applies to virtually every other SQL/NoSQL driver), and the frameworks are steadily removing the bottlenecks one at a time.

After a few iterations at PostRank, we rewrote some key drivers, grabbed Thin (evented app server), and made heavy use of continuations in Ruby 1.9 to create our own API framework (Goliath) which is perfectly capable of serving hundreds of concurrent requests at a time from within a single Ruby VM. In fact, we even managed to avoid all the callback spaghetti that plagues node.js applications, which also means that the same continuation approach works just as well with a vanilla Rails application.
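
As a rough illustration of how that works, here is a small self-contained sketch. It is not Goliath's code, and it assumes the "continuations" in question are Ruby 1.9 fibers. `TinyReactor`, `query`, and `serve_all` are hypothetical names: the reactor is a toy stand-in for an event loop with a callback-style driver, and `query` wraps that callback API so the calling code reads as if it were blocking.

```ruby
require "fiber" # provides Fiber.current on Ruby 1.9 through 3.0

# Toy stand-in for an event loop plus a callback-style database driver.
class TinyReactor
  def initialize
    @pending = []
  end

  # Callback API: queue the "query" and hand the result to the block later.
  def async_query(sql, &callback)
    @pending << -> { callback.call("rows for #{sql}") }
  end

  # Drain the queue; callbacks may enqueue further work while it runs.
  def run
    @pending.shift.call until @pending.empty?
  end
end

# Looks synchronous to the caller: park the current fiber until the
# callback fires, then return the result as an ordinary return value.
def query(reactor, sql)
  fiber = Fiber.current
  reactor.async_query(sql) { |rows| fiber.resume(rows) }
  Fiber.yield
end

# Start one fiber per request, then let the reactor interleave them.
def serve_all(reactor, requests)
  responses = {}
  requests.each do |name, sql_list|
    Fiber.new do
      # Plain sequential code, no nested callbacks.
      responses[name] = sql_list.map { |sql| query(reactor, sql) }
    end.resume
  end
  reactor.run
  responses
end

p serve_all(TinyReactor.new, { a: ["q1", "q2"], b: ["q3"] })
```

Each request handler is written top to bottom, yet both requests are in flight at once inside a single VM: whenever one is waiting on its query, the reactor is free to advance the other.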

It baffles me that this is not a solved problem already. The state of the art in end-to-end Rails stack performance is not good enough. We need to fix that.

Update: Thanks to Gregg Pollack and his awesome team at Envylabs, here is a recording of an updated and somewhat compressed version of the talk on this subject I gave at OSCON:

Ilya Grigorik is a web ecosystem engineer, author of High Performance Browser Networking (O'Reilly), and Principal Engineer at Shopify — follow on
