Configuring & Optimizing WebSocket Compression

Good news: browser support for the latest draft of “Compression Extensions” for the WebSocket protocol, a much needed and overdue feature, will be landing in early 2014. It ships in Chrome M32+ (available in Canary already), and Firefox and WebKit implementations should follow.

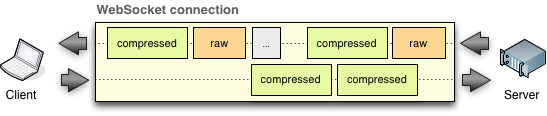

Specifically, it enables the client and server to negotiate a compression algorithm and its parameters, and then selectively apply it to the data payloads of each WebSocket message: the server can compress delivered data to the client, and the client can compress data sent to the server.

- Negotiating compression support and parameters

- Selective message compression

- Optimizing and scaling Deflate compression

- Deploying WebSocket compression

Negotiating compression support and parameters

Per-message compression is a WebSocket protocol extension, which means that it must be negotiated as part of the WebSocket handshake. Further, unlike a regular HTTP request (e.g. XMLHttpRequest initiated by the browser), WebSocket also allows us to negotiate compression parameters in both directions (client-to-server and server-to-client). That said, let's start with the simplest possible case:

GET /socket HTTP/1.1

Host: thirdparty.com

Origin: http://example.com

Connection: Upgrade

Upgrade: websocket

Sec-WebSocket-Version: 13

Sec-WebSocket-Key: dGhlIHNhbXBsZSBub25jZQ==

Sec-WebSocket-Extensions: permessage-deflate

HTTP/1.1 101 Switching Protocols

Upgrade: websocket

Connection: Upgrade

Access-Control-Allow-Origin: http://example.com

Sec-WebSocket-Accept: s3pPLMBiTxaQ9kYGzzhZRbK+xOo=

Sec-WebSocket-Extensions: permessage-deflate

The client initiates the negotiation by advertising the permessage-deflate extension in the Sec-WebSocket-Extensions header. In turn, the server must confirm the advertised extension by echoing it in its response.

If the server omits the extension confirmation then the use of permessage-deflate is declined, and both the client and server proceed without it - i.e. the handshake completes and messages won't be compressed. Conversely, if the extension negotiation is successful, both the client and server can compress transmitted data as necessary:

- Current standard uses Deflate compression.

- Compression is only applied to application data: control frames and frame headers are unaffected.

- Both client and server can selectively compress individual messages: if a message is compressed, the RSV1 bit in the header of its first frame is set.
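To make the last point concrete, here is a minimal sketch that inspects the first byte of a WebSocket frame header. The bit layout (FIN, then RSV1 to RSV3, then a 4-bit opcode) follows the base WebSocket framing; the function name `describe_first_byte` is made up for this example.

```python
def describe_first_byte(first_byte: int) -> dict:
    """Decode the flags carried in the first byte of a WebSocket frame header."""
    return {
        "fin": bool(first_byte & 0x80),   # final fragment of the message
        "rsv1": bool(first_byte & 0x40),  # set when the message is compressed
        "opcode": first_byte & 0x0F,      # 0x1 = text, 0x2 = binary
    }


uncompressed_text = describe_first_byte(0x81)  # FIN + text opcode
compressed_text = describe_first_byte(0xC1)    # FIN + RSV1 + text opcode
```

A receiver that negotiated permessage-deflate would check `rsv1` on the first frame of each message to decide whether to inflate the payload.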

Selective message compression

Selective compression is a particularly interesting and useful feature. Just because we've negotiated compression support doesn't mean that all messages must be compressed! After all, if the payload is already compressed (e.g. image data or any other compressed payload), then running deflate on each message would unnecessarily waste CPU cycles on both ends. To avoid this, WebSocket allows both the server and client to selectively compress individual messages.

How do the server and client know when to compress data? This is where your choice of a WebSocket server and API can make a big difference: a naive implementation will simply compress all message payloads, whereas a smart server may offer an additional API to indicate which payloads should be compressed.

Similarly, the browser can selectively compress payloads sent to the server. However, this is where we run into our first limitation: the WebSocket browser API does not provide any mechanism to signal whether a payload should be compressed. As a result, the current implementation in Chrome compresses all payloads. If you're already transferring compressed data over WebSocket, keep this in mind before enabling the deflate extension: compressing that data a second time may add unnecessary overhead on both sides of the connection.

Optimizing and scaling Deflate compression

Compressed payloads can significantly reduce the amount of transmitted data, which leads to bandwidth savings and faster message delivery. That said, there are some costs too! Deflate uses a combination of LZ77 and Huffman coding to compress data: first, LZ77 is used to eliminate duplicate strings; second, Huffman coding is used to encode common bit sequences with shorter representations.

By default, enabling compression will add at least ~300KB of extra memory overhead per WebSocket connection - arguably, not much, but if your server is juggling a large number of WebSocket connections, or if the client is running on a memory-limited device, then this is something that should be taken into account. The exact calculation based on zlib implementation of Deflate is as follows:

compressor = (1 << (windowBits + 2)) + (1 << (memLevel + 9))

decompressor = (1 << windowBits) + 1440 * 2 * sizeof(int)

total = compressor + decompressor

Both peers maintain separate compression and decompression contexts, each of which requires a separate LZ77 window buffer (as defined by windowBits), plus additional overhead for the Huffman tree and other compressor and decompressor state. The default settings are:

- compressor: windowBits = 15, memLevel = 8 → ~256KB

- decompressor: windowBits = 15 → ~44KB
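The formulas above are easy to evaluate for other settings. The sketch below transcribes them directly; the function names are illustrative, and `SIZEOF_INT = 4` is an assumption about the platform's `sizeof(int)`.

```python
SIZEOF_INT = 4  # assumption: 4-byte int, as on most current platforms


def compressor_bytes(window_bits: int = 15, mem_level: int = 8) -> int:
    """Memory for one compression context, per the formula above."""
    return (1 << (window_bits + 2)) + (1 << (mem_level + 9))


def decompressor_bytes(window_bits: int = 15) -> int:
    """Memory for one decompression context, per the formula above."""
    return (1 << window_bits) + 1440 * 2 * SIZEOF_INT


def total_bytes(window_bits: int = 15, mem_level: int = 8) -> int:
    """Combined per-connection overhead for one peer."""
    return compressor_bytes(window_bits, mem_level) + decompressor_bytes(window_bits)


for bits in (10, 12, 15):
    print(f"windowBits={bits}: {total_bytes(bits) / 1024:.1f} KB per connection")
```

With the defaults this gives 262144 bytes for the compressor and 44288 bytes for the decompressor, which is where the ~300KB figure comes from.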

The good news is that permessage-deflate allows us to customize the size of the LZ77 window and thus limit the memory overhead via two extension parameters: {client, server}_no_context_takeover and {client, server}_max_window_bits. Let's take a look under the hood...

Optimizing LZ77 window size

A full discussion of LZ77 and Huffman coding is outside the scope of this post, but to understand the above extension parameters, let's first take a small detour to understand what we are configuring and the inherent tradeoffs between memory and compression performance.

The windowBits parameter sets the size of the “sliding window” used by the LZ77 algorithm. The video above is a great visual demonstration of LZ77 at work: the algorithm maintains a “sliding window” of previously seen data and replaces repeated strings (indicated in red) with back-references (e.g. go back X characters, copy Y characters) - that's LZ77 in a nutshell. As a result, the larger the window, the higher the likelihood that LZ77 will find and eliminate duplicate strings.
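The effect of the window size can be seen in a toy LZ77 encoder. This is a deliberately naive sketch for illustration only: it is not how zlib implements Deflate (there is no hashing and no Huffman stage), and the names `lz77_tokens` and `lz77_decode` are made up for this example.

```python
def lz77_tokens(data: bytes, window_size: int, min_match: int = 3) -> list:
    """Greedy LZ77: emit literal bytes or (distance, length) back-references."""
    tokens = []
    i = 0
    while i < len(data):
        best_len, best_dist = 0, 0
        # Only the last `window_size` bytes are eligible as match sources.
        for j in range(max(0, i - window_size), i):
            length = 0
            while i + length < len(data) and data[j + length] == data[i + length]:
                length += 1
            if length > best_len:
                best_len, best_dist = length, i - j
        if best_len >= min_match:
            tokens.append((best_dist, best_len))  # go back X bytes, copy Y bytes
            i += best_len
        else:
            tokens.append(data[i:i + 1])  # literal byte
            i += 1
    return tokens


def lz77_decode(tokens: list) -> bytes:
    """Rebuild the original bytes from literals and back-references."""
    out = bytearray()
    for token in tokens:
        if isinstance(token, tuple):
            distance, length = token
            for _ in range(length):
                out.append(out[-distance])
        else:
            out += token
    return bytes(out)


sample = b"abcdefgh12345678abcdefgh"
small_window = lz77_tokens(sample, window_size=8)   # repeat is out of reach
large_window = lz77_tokens(sample, window_size=32)  # repeat becomes one token
```

With an 8-byte window the second `abcdefgh` falls outside the window and is emitted as literals; with a 32-byte window it collapses into a single back-reference.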

How large is the LZ77 sliding window? By default, the window is initialized to 15 bits, which translates to 2^15 bytes (32KB) of space. However, we can customize the size of the sliding window as part of the WebSocket handshake:

GET /socket HTTP/1.1

Upgrade: websocket

Sec-WebSocket-Key: ...

Sec-WebSocket-Extensions: permessage-deflate;

client_max_window_bits; server_max_window_bits=10

- The client advertises that it supports custom window size via client_max_window_bits

- The client requests that the server should use a window of size 2^10 (1KB)

HTTP/1.1 101 Switching Protocols

Connection: Upgrade

Sec-WebSocket-Accept: ...

Sec-WebSocket-Extensions: permessage-deflate;

server_max_window_bits=10

- The server confirms that it will use a 2^10 (1KB) window size

- The server opts-out from requesting a custom client window size
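The extension header values in this exchange can be picked apart with a few lines of code. The parser below is a simplified sketch: `parse_extensions` is a hypothetical helper, and it does not handle quoted parameter values that themselves contain commas or semicolons.

```python
def parse_extensions(header_value: str) -> list:
    """Parse a Sec-WebSocket-Extensions value into (name, params) pairs."""
    extensions = []
    for offer in header_value.split(","):
        parts = [part.strip() for part in offer.split(";") if part.strip()]
        if not parts:
            continue
        params = {}
        for part in parts[1:]:
            key, _, value = part.partition("=")
            # Parameters without a value (e.g. client_max_window_bits) map to None.
            params[key.strip()] = value.strip().strip('"') or None
        extensions.append((parts[0], params))
    return extensions


request = parse_extensions(
    "permessage-deflate; client_max_window_bits; server_max_window_bits=10"
)
response = parse_extensions("permessage-deflate; server_max_window_bits=10")
```

Here `request` carries both parameters, while `response` contains only `server_max_window_bits`, matching the server's decision not to request a custom client window.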

Both the client and server must maintain the same sliding windows to exchange data: one buffer for client → server compression context, and one buffer for server → client context. As a result, by default, we will need two 32KB buffers (default window size is 15 bits), plus other compressor overhead. However, we can negotiate a smaller window size: in the example above, we limit the server → client window size to 1KB.

Why not start with the smaller buffer? Simple, the smaller the window the less likely it is that LZ77 will find an appropriate back-reference. That said, the performance will vary based on the type and amount of transmitted data and there is no single rule of thumb for best window size. To get the best performance, test different window sizes on your data! Then, where needed, decrease window size to reduce memory overhead.

Optimizing Deflate memLevel

The memLevel parameter controls the amount of memory allocated for internal compression state: when set to 1, it uses the least memory, but slows down the compression algorithm and reduces the compression ratio; when set to 9, it uses the most memory and delivers the best performance. The default memLevel is 8, which accounts for 128KB (1 << 17 bytes) of the compressor's memory overhead.

Note that the decompressor does not need to know the memLevel chosen by the compressor. As a result, the peers do not need to negotiate this setting in the handshake - they are both free to customize this value as they wish. The server can tune this value as required to tradeoff speed, compression ratio, and memory - once again, the best setting will vary based on your data stream and operational requirements.
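A quick way to convince yourself that memLevel is a compressor-only setting is to compress the same data at every level and inflate each result with one identically configured decompressor. This is a small sketch using Python's `zlib` module; the sample payload and helper names are made up for illustration.

```python
import zlib


def deflate_raw(data: bytes, mem_level: int, window_bits: int = 15) -> bytes:
    """Compress to a raw Deflate stream with the given memLevel."""
    compressor = zlib.compressobj(
        zlib.Z_DEFAULT_COMPRESSION, zlib.DEFLATED, -window_bits, mem_level
    )
    return compressor.compress(data) + compressor.flush()


def inflate_raw(data: bytes, window_bits: int = 15) -> bytes:
    """Decompress a raw Deflate stream; note there is no memLevel argument."""
    decompressor = zlib.decompressobj(-window_bits)
    return decompressor.decompress(data) + decompressor.flush()


sample = b'{"event": "push", "repo": "example/repo"}' * 50
sizes = {level: len(deflate_raw(sample, level)) for level in range(1, 10)}
```

The decompressor is only told the window size, yet it can inflate the output of any memLevel.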

Context takeover

By default, the compression and decompression contexts are persisted across different WebSocket messages - i.e. the sliding window from the previous message is used to encode the content of the next message. If messages are similar, as they usually are, this improves the compression ratio. However, the downside is that the context overhead is a fixed cost for the entire lifetime of the connection - i.e. memory must be allocated at the beginning and must be maintained until the connection is closed.

Well, what if we relaxed this constraint and instead allowed the peers to reset the context between the different messages? That's what the “no context takeover” option is all about:

GET /socket HTTP/1.1

Upgrade: websocket

Sec-WebSocket-Key: ...

Sec-WebSocket-Extensions: permessage-deflate;

client_max_window_bits; server_max_window_bits=10;

client_no_context_takeover; server_no_context_takeover

- Client advertises that it will disable context takeover

- Client requests that the server also disables context takeover

HTTP/1.1 101 Switching Protocols

Connection: Upgrade

Sec-WebSocket-Accept: ...

Sec-WebSocket-Extensions: permessage-deflate;

server_max_window_bits=10; client_max_window_bits=12;

client_no_context_takeover; server_no_context_takeover

- Server acknowledges the client “no context takeover” recommendation

- Server indicates that it will disable context takeover

Disabling “context takeover” prevents the peer from using the compressor context from a previous message to encode contents of the next message. In other words, each message is encoded with its own sliding window and Huffman tree.

The upside of disabling context takeover is that both the client and server can reset their contexts between different messages, which significantly reduces the total overhead of the connection when it's idle. That said, the downside is that compression performance will likely suffer as well. How much? You guessed it, the answer depends on the actual application data being exchanged.
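To get a feel for the cost, the sketch below compresses a stream of similar messages twice: once with a single shared compressor (context takeover) and once with a fresh compressor per message (no context takeover). It approximates permessage-deflate with Python's `zlib` by sync-flushing after each message and dropping the trailing `00 00 ff ff` bytes; the messages and helper names are invented for illustration.

```python
import zlib

SYNC_FLUSH_TAIL = b"\x00\x00\xff\xff"


def new_compressor(window_bits: int = 15, mem_level: int = 8):
    """Create a raw Deflate compressor (negative wbits means no zlib wrapper)."""
    return zlib.compressobj(
        zlib.Z_DEFAULT_COMPRESSION, zlib.DEFLATED, -window_bits, mem_level
    )


def compress_message(compressor, payload: bytes) -> bytes:
    """Compress one message and strip the sync-flush marker."""
    data = compressor.compress(payload) + compressor.flush(zlib.Z_SYNC_FLUSH)
    return data[:-4] if data.endswith(SYNC_FLUSH_TAIL) else data


def total_size(messages: list, context_takeover: bool) -> int:
    """Total compressed bytes with or without a shared sliding window."""
    shared = new_compressor()
    total = 0
    for message in messages:
        compressor = shared if context_takeover else new_compressor()
        total += len(compress_message(compressor, message))
    return total


messages = [
    b'{"type": "tick", "symbol": "ABC", "price": %d}' % (100 + i)
    for i in range(50)
]
with_takeover = total_size(messages, context_takeover=True)
without_takeover = total_size(messages, context_takeover=False)
```

For short, similar messages like these, the shared context wins by a wide margin because each new message is mostly back-references into the previous ones; run the same comparison on your own traffic before deciding.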

Optimizing compression parameters

Now that we know what we're tweaking, a simple Ruby script can help us iterate over all of the options (memLevel and window size) to pick the optimal settings. For the sake of an example, let's compress the GitHub timeline:

$> curl https://github.com/timeline.json -o timeline.json

$> ruby compare.rb timeline.json

Original file (timeline.json) size: 30437 bytes

Window size: 8 bits (256 bytes)

memLevel: 1, compressed size: 19472 bytes (36.03% reduction)

memLevel: 9, compressed size: 15116 bytes (50.34% reduction)

Window size: 11 bits (2048 bytes)

memLevel: 1, compressed size: 9797 bytes (67.81% reduction)

memLevel: 9, compressed size: 8062 bytes (73.51% reduction)

Window size: 15 bits (32768 bytes)

memLevel: 1, compressed size: 8327 bytes (72.64% reduction)

memLevel: 9, compressed size: 7027 bytes (76.91% reduction)

The smallest allowed window size (256 bytes) provides ~50% compression, and raising the window to 2KB takes us to ~73%! From there, it is diminishing returns: a 32KB window yields only a few extra percent (see full output). Hmm! If I were streaming this data over a WebSocket, a 2KB window size would seem like a reasonable optimization.
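A similar experiment is easy to reproduce. The sketch below is not the compare.rb script used above, just a rough Python equivalent built on the standard `zlib` module. It runs on a synthetic payload instead of timeline.json, and it starts at a 9-bit window because Python's `zlib` documents 9 to 15 as the accepted range; both choices are assumptions of this example.

```python
import zlib


def compare(data: bytes, window_sizes=(9, 11, 15), mem_levels=(1, 9)) -> dict:
    """Return compressed size for each (windowBits, memLevel) combination."""
    results = {}
    for window_bits in window_sizes:
        for mem_level in mem_levels:
            compressor = zlib.compressobj(
                zlib.Z_DEFAULT_COMPRESSION, zlib.DEFLATED, -window_bits, mem_level
            )
            compressed = compressor.compress(data) + compressor.flush()
            results[(window_bits, mem_level)] = len(compressed)
    return results


def report(data: bytes, results: dict) -> None:
    """Print sizes and reductions in a format close to the output above."""
    print(f"Original size: {len(data)} bytes")
    for (window_bits, mem_level), size in sorted(results.items()):
        reduction = 100.0 * (1 - size / len(data))
        print(
            f"window: {window_bits} bits, memLevel: {mem_level}, "
            f"compressed size: {size} bytes ({reduction:.2f}% reduction)"
        )


# Synthetic stand-in for a JSON feed; swap in your own captured messages.
sample = b"".join(
    b'{"id": %d, "type": "PushEvent", "public": true}\n' % i for i in range(500)
)
results = compare(sample)
report(sample, results)
```

Replace `sample` with a capture of your own message stream to see where the returns start to diminish for your data.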

Deploying WebSocket compression

Customizing LZ77 window size and context takeover are advanced optimizations. Most applications will likely get the best performance by simply using the defaults (32KB window size and shared sliding window). That said, it is useful to understand the incurred overhead (upwards of 300KB per connection), and the knobs that can help you tweak these parameters!

Looking for a WebSocket server that supports per-message compression? Rumor has it, Jetty, Autobahn and WebSocket++ already support it, and other servers (and clients) are sure to follow. For a deep-dive on the negotiation workflow, frame layouts, and more, check out the official specification.

P.S. For more WebSocket optimization tips: WebSocket chapter in High Performance Browser Networking.

Ilya Grigorik is a web ecosystem engineer, author of High Performance Browser Networking (O'Reilly), and Principal Engineer at Shopify.
