Load Balancing & QoS with HAProxy

You put together a brand new Rails/Merb app over a weekend, add a pack of Mongrels and a reverse proxy (like Nginx), and you're up and running. Well, almost: what about that one request that tends to run forever, often forcing the user to double-check their internet connection? Response time is king, and you always want to make sure that your site feels snappy to the user. Did you know that Flickr optimizes all of their pages to render in under 250ms?

When you're fighting with response times, the worst thing you can possibly do is queue up another request behind an already long-running process. Not only does the first request take forever, but everyone else must wait in line for it to finish as well! To mitigate the problem, HAProxy goes beyond a simple round-robin scheduler and implements a very handy feature: intelligent request queuing!

HAProxy Request Queuing

Usual (bad) scenario: 2 application servers, each capable of serving 5 concurrent requests, and a reverse proxy (Nginx, Apache, Pound, etc.) in front, which distributes requests in the usual round-robin fashion (a, b, a, b, ...). A long-running request hits our application, gets to the head of the app server queue, and begins processing. Alas, now everyone else queued on that server is waiting for it to finish, and the result is a poor user experience. (For the astute reader: yes, the concurrency on the server is usually greater than 1, but Rails in particular has some nasty mutex locks deep inside. Hence, a long-running database call can very quickly render the entire app server incapable of doing anything else.)

HAProxy (good) request queuing: instead of blindly passing requests to each app server in round-robin fashion, HAProxy allows us to limit the number of outstanding connections between the proxy and each app server. For example, we can specify that each app server may only have two outstanding requests (which means that at most 4 jobs will be queued on our two application servers), and all other clients will wait in the HAProxy queue for the first available app server. Beautiful! If one of our app servers is taking a long time to respond, at most one other request will be affected and everyone else will be processed by the first available server in our cluster.
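To make the difference concrete, here is a toy simulation of the two strategies. It is only a sketch under loud assumptions, not HAProxy's actual scheduler: each app server is modeled as running one request at a time (the Rails mutex case mentioned above), the proxy hands a request to the least-loaded server that is still under its limit, and the function names `round_robin` and `proxy_queue` are made up for this illustration.

```python
from typing import List, Tuple

Request = Tuple[float, float]  # (arrival time, service time), in seconds


def round_robin(requests: List[Request], n_servers: int) -> List[float]:
    """Blind round-robin: request i goes to server i % n_servers.

    Assumption: each server runs its requests strictly one at a time.
    Returns how long each request waited before processing started.
    """
    free_at = [0.0] * n_servers  # time at which each server becomes idle
    waits = []
    for i, (arrival, service) in enumerate(requests):
        s = i % n_servers
        start = max(arrival, free_at[s])
        free_at[s] = start + service
        waits.append(start - arrival)
    return waits


def proxy_queue(requests: List[Request], n_servers: int, maxconn: int) -> List[float]:
    """Central FIFO queue with a per-server cap on outstanding requests.

    A request is only handed to a server that has fewer than `maxconn`
    outstanding requests; otherwise it waits at the proxy until a slot frees up.
    """
    finishes: List[List[float]] = [[] for _ in range(n_servers)]
    waits = []
    clock = 0.0  # time at which the head of the proxy queue is examined
    for arrival, service in requests:
        clock = max(clock, arrival)
        while True:
            # Outstanding = dispatched to the server but not yet finished.
            loads = [sum(1 for f in fs if f > clock) for fs in finishes]
            s = min(range(n_servers), key=lambda k: loads[k])
            if loads[s] < maxconn:
                break
            # Every server is at its limit: jump to the next completion.
            clock = min(f for fs in finishes for f in fs if f > clock)
        start = max(clock, finishes[s][-1] if finishes[s] else 0.0)
        finishes[s].append(start + service)
        waits.append(start - arrival)
    return waits


# One 10-second request followed by five 1-second requests, all arriving at t=0.
requests = [(0.0, 10.0)] + [(0.0, 1.0)] * 5

print("round-robin      :", round_robin(requests, n_servers=2))
print("queue, maxconn=2 :", proxy_queue(requests, n_servers=2, maxconn=2))
print("queue, maxconn=1 :", proxy_queue(requests, n_servers=2, maxconn=1))
```

In this toy run, round-robin leaves two quick requests stuck behind the slow one (waiting 10 and 11 seconds), a limit of two outstanding requests per server leaves exactly one stuck, and a limit of one leaves none: everyone else simply drains through the healthy server.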

Sample config to illustrate the functionality (download full config below):

listen app_a_proxy 127.0.0.1:8100

# - equal weights on all servers

# - queue requests on HAProxy queue once maxconn limit on the app server is reached

# - minconn dynamically scales the connection concurrency (bound by maxconn) depending on size of HAProxy queue

# - check health of app. server every 20 seconds

server a1 127.0.0.1:8010 weight 1 minconn 3 maxconn 6 check inter 20000

server a2 127.0.0.1:8011 weight 1 minconn 3 maxconn 6 check inter 20000

listen app_b_proxy 127.0.0.1:8200

# - second cluster of servers, for another app or for long-running tasks

server b1 127.0.0.1:8050 weight 1 minconn 1 maxconn 3 check inter 40000

server b2 127.0.0.1:8051 weight 1 minconn 1 maxconn 3 check inter 40000

server b3 127.0.0.1:8052 weight 1 minconn 1 maxconn 3 check inter 40000

QoS with HAProxy and Nginx

Do you want to guarantee best performance to a sub section of your site? With a little extra work on your HTTP server (Nginx in this example), we can distribute the load between different Mongrel clusters, each hidden behind a HAProxy interface:

# ... nginx configuration

upstream fast_mongrels { server 127.0.0.1:8100; }

upstream slow_mongrels { server 127.0.0.1:8200; }

server {

listen 80;

location /main {

proxy_pass http://fast_mongrels;

break;

}

location /slow {

proxy_pass http://slow_mongrels;

break;

}

}

# ... nginx config continues

Take a close look at the earlier HAProxy config: the app_b_proxy cluster has a lower maxconn of 3 (so fewer requests can queue up on each app server), and it also features more app servers to process the long-running tasks. This way, anytime a user requests '/slow', they will be sent to our dedicated app_b_proxy and the load will be spread between 3 dedicated servers. Meanwhile, the rest of our site is completely unaffected, as it is served by a different cluster!
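The minconn/maxconn pairs in the earlier HAProxy config (3/6 for app_a_proxy, 1/3 for app_b_proxy) are worth a closer look too. As I read the HAProxy documentation, when minconn is set the per-server limit becomes dynamic: it never drops below minconn, never exceeds maxconn, and ramps between the two as the backend's load approaches its `fullconn` threshold. The sketch below is a simplified reading of that ramp, not HAProxy's code; `effective_maxconn` is a made-up name, and the `fullconn` value of 20 is a hypothetical number that does not appear in the config above.

```python
def effective_maxconn(minconn: int, maxconn: int, backend_conns: int, fullconn: int) -> int:
    """Approximate per-server connection limit for a given backend load.

    Assumption: a linear ramp from minconn up to maxconn, reaching maxconn
    once the backend holds `fullconn` concurrent connections. Real HAProxy
    also factors in things like slowstart, which are ignored here.
    """
    if backend_conns >= fullconn:
        return maxconn
    scaled = backend_conns * maxconn // fullconn
    return max(minconn, scaled)


FULLCONN = 20  # hypothetical value, chosen only for this illustration

for load in (0, 10, 15, 18, 20):
    a = effective_maxconn(3, 6, load, FULLCONN)  # app_a_proxy servers
    b = effective_maxconn(1, 3, load, FULLCONN)  # app_b_proxy servers
    print(f"backend load {load:2d}: app_a limit {a}, app_b limit {b}")
```

The practical upshot: under light load each server is held to a small number of outstanding requests, which keeps quick requests from piling up behind a slow one, and the limit only opens up toward maxconn when the backend as a whole is busy.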

Hidden Gems in Nginx and HAProxy

Make sure to read the detailed overview of the architecture and the many great examples of HAProxy in the wild, and study the many configuration options available for more hidden goodies.

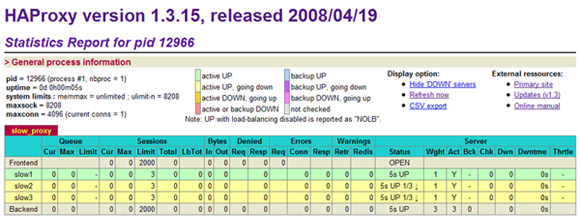

Last but not least, it's worth mentioning that you can get similar functionality in Nginx directly with the 'Fair Proxy Balancer' plugin (EngineYard is rumored to be running it). Having said that, HAProxy is more robust, gives you more knobs to tweak, and features a great admin interface!

Ilya Grigorik is a web ecosystem engineer, author of High Performance Browser Networking (O'Reilly), and Principal Engineer at Shopify.
