Secure UTF-8 Input in Rails

Approximately 64.2 percent of online users do not speak English. OK, once we adjust for second-language speakers this number won't be as large - let's say we cut it in half, and that's being generous! We're still looking at roughly 30 percent of the total online population who, in all likelihood, cannot use your website due to simple encoding problems. Surprising? Unacceptable! On a more positive note, if you're using Rails 1.2.2 or later, you are already serving UTF-8 content and have almost transparent Unicode support. That said, "almost transparent" is both its biggest strength and its biggest weakness: it's great that it works, but it hides some of the complexity of multi-byte operations that we need to be aware of, and it introduces some new security holes. It's a leaky abstraction, and we need to address some of these leaks.

Making sense of Unicode/UTF-8 in Ruby

Unicode was developed to address the modern world's need for multilingual support. Specifically, its aim was to enable processing of arbitrary languages mixed with each other - a rather ambitious goal once you realize the sheer number of possible characters (graphemes). Over the years, a number of encodings (UTF-7, UTF-8, CESU-8, UTF-16/UCS-2, etc.) have been developed to address this need, but UTF-8 emerged as the de facto standard. To fully appreciate some of the complexities of the task, and to better understand the leaks, I would strongly recommend that you take the time to read Joel Spolsky's The Absolute Minimum Every Software Developer Absolutely, Positively Must Know About Unicode and Character Sets.

The underlying problem with UTF-8 is its multi-byte encoding mechanism: a single character may occupy anywhere from one to four bytes. Remember your one-line C/C++ character iterator loop? Kiss that goodbye: now we need additional logic. In Ruby-land, full Unicode support is still lacking (by default), but the long-awaited Ruby 1.9/2.0 is expected to be Unicode-safe. In the meantime, the Rails team decided to stop waiting for a miracle and introduced Multibyte support in its 1.2.2 release. There is some hand-twisting behind the scenes and I would recommend that you familiarize yourself with it: Multibyte for Rails.
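To make the byte-versus-character gap concrete, here is a minimal sketch. It assumes a Ruby where String is encoding-aware (1.9 or later), which is newer than the Ruby this post was written against; the sample word is only an illustration.

```ruby
# Three characters that take nine bytes in UTF-8.
# The \u escapes spell the Japanese word "nihongo" and force a UTF-8 string.
word = "\u65E5\u672C\u8A9E"

puts word.encoding   # the string is tagged as UTF-8
puts word.length     # counts characters
puts word.bytesize   # counts bytes

# A byte-wise loop, the C-style habit, only sees fragments of characters.
p word.each_byte.first(3)

# A character-wise loop sees whole characters.
p word.each_char.to_a.length

# Slicing at an arbitrary byte offset can cut a character in half,
# leaving a string that is no longer valid UTF-8.
p word.byteslice(0, 2).valid_encoding?
```

The last line is the interesting one: any code that truncates, pads or indexes by byte count can manufacture invalid UTF-8 out of perfectly valid input.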

Validating UTF-8 Input

The first important distinction that you need to be aware of when switching to UTF-8 is that not every sequence of bytes is a valid UTF-8 string. This was never a problem before: in ISO-8859-1 every byte sequence was valid by default. With UTF-8, we need to massage our input before we can make this assumption. The usual culprits are old browsers, automated agents, and malicious users.
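As a quick illustration of that distinction, here is a minimal sketch. It assumes Ruby 2.1 or later, where `String#valid_encoding?` and `String#scrub` are built in; the helper name `sanitize_utf8` is hypothetical and not part of Rails.

```ruby
# Hypothetical helper: return a valid UTF-8 copy of untrusted input.
def sanitize_utf8(untrusted)
  # Copy the bytes and label them as UTF-8 without transcoding anything.
  candidate = untrusted.dup.force_encoding(Encoding::UTF_8)
  # scrub('') drops every invalid byte sequence; valid input passes through.
  candidate.scrub('')
end

# 0xE9 is a complete character in ISO-8859-1, but in UTF-8 it announces a
# multi-byte sequence that never arrives, so the string is invalid.
bad = "caf\xE9 ok".b

puts bad.dup.force_encoding(Encoding::ISO_8859_1).valid_encoding?
puts bad.dup.force_encoding(Encoding::UTF_8).valid_encoding?
puts sanitize_utf8(bad)
```

The same bytes are valid under one label and invalid under the other, which is why the check has to happen before any other processing. On the Ruby versions this post targets neither method exists, which is where the filter that follows comes in.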

To accomplish this, and to explore some of the reasoning behind the solution, check out Paul Battley's Fixing invalid UTF-8 in Ruby. He suggests the following filter:

require 'iconv'

ic = Iconv.new('UTF-8//IGNORE', 'UTF-8')

valid_string = ic.iconv(untrusted_string + ' ')[0..-2]

Filtering UTF-8 Input

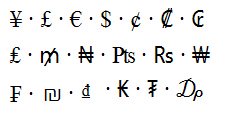

Once the string is validated, we have to make sure that its contents match our expectations. As previously mentioned, Unicode does not encode renderings (glyphs); it uses an abstract notion of a character (grapheme), which is in turn mapped to a code point. Given the enormous size of the input space, blindly accepting any UTF-8 is usually a bad idea. Thankfully, Unicode assigns every code point a general category, which allows us to filter the input. To begin with, you will probably want to discard the entire Cx family (the "Other" categories, which include control characters), and then fine-tune other categories depending on your application:

- Cc - Other, Control

- Cf - Other, Format

- Cs - Other, Surrogate

- Co - Other, Private Use

- Cn - Other, Not Assigned (no characters in the file have this property)

Once released, Ruby 1.9 will include the Oniguruma regular expression library, which is encoding-aware. However, we don't have to wait for Ruby 1.9 (frankly, we can't): we can install Oniguruma as a gem and get back to our problem:

require 'oniguruma'

# Find all Cx category code points (single quotes keep the backslash in \p intact)

reg = Oniguruma::ORegexp.new('\p{C}', {:encoding => Oniguruma::ENCODING_UTF8})

# Erase the Cx code points from our validated string

filtered_string = reg.gsub(valid_string, '')

It is also worth mentioning that Oniguruma has a number of other great features which are definitely worth exploring further.
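For comparison, here is a minimal sketch of the same filter using only the built-in Regexp. It assumes Ruby 1.9 or later, where `\p{C}` is understood without the gem. The helper name `strip_cx` and its `keep:` parameter are hypothetical, added because tab and newline also belong to Cc and a blanket filter removes them too.

```ruby
# Hypothetical helper: strip every Cx ("Other") code point from a string
# that has already been validated as UTF-8. Characters listed in keep
# are left in place even though they fall in a Cx category.
def strip_cx(valid_string, keep: "")
  valid_string.gsub(/\p{C}/) { |ch| keep.include?(ch) ? ch : "" }
end

# Sample input containing NUL, ZERO WIDTH SPACE, tab, BEL and a newline.
sample = "na\u00EFve\u0000 text\u200B\twith\u0007 debris\n"

p strip_cx(sample)                # every Cx code point is removed
p strip_cx(sample, keep: "\t\n")  # tab and newline survive
```

Whether to keep whitespace controls depends on the field: a single-line username can lose them, a multi-line comment box probably should not.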

Serving minty-fresh UTF-8

Once the data is validated and filtered, it has to be properly stored. MySQL usually defaults to latin1 encoding, and we have to explicitly tell it to use UTF-8:

# Method 1: in your mysql config (my.cnf)

default-character-set=utf8

# Method 2: explicitly specify the character set

CREATE DATABASE foo CHARACTER SET utf8 COLLATE utf8_general_ci;

CREATE TABLE widgets (...) ENGINE=MyISAM CHARACTER SET utf8;

# Finally, in your Rails DB config

production:

  username: username

  host: xxx.xxx.xxx.xxx

  encoding: utf8 # make Rails UTF-8 aware

Once the data is stored, we also need to serve it with proper headers. Rails should do this for us automatically, but sometimes we might have to massage it by specifying our own custom headers:

@headers["Content-Type"] = "text/html; charset=utf-8" # general purpose

@headers["Content-Type"] = "text/xml; charset=utf-8" # XML content

Finally, Unicode also has one gotcha when it comes to caching, since cached pages are typically served straight by the front-end server and never pass through Rails. Either make sure your front-end server (Apache, lighttpd, etc.) is configured to serve UTF-8 headers by default, or add the following line at the very top of your template (once the browser encounters it, it drops everything it has done and starts over):

<meta http-equiv="Content-Type" content="text/html; charset=utf-8" />

Additional resources

In conclusion, Unicode often appears to be a complicated beast, but once you figure out the basics, it won't seem all that bad. For further reading, I would also recommend perusing the following resources:

- I18n, M17n, Unicode, and all that by Tim Bray (RubyConf 2006), and his follow-up.

- Localization in Ruby on Rails by Till Vollmer

- The Definitive Guide to Web Character Encoding by Tommy Olsson

Ilya Grigorik is a web ecosystem engineer, author of High Performance Browser Networking (O'Reilly), and Principal Engineer at Shopify.
